Overview

Through video and panel exhibits, this project takes on the challenge of science communication that allows us to think and talk about the impact of generative AI on people and society from multiple perspectives.

You will watch videos of three professionals: poet TANIKAWA Shuntaro; OZAWA Kazuhiro of the comedy duo Speedwagon; and OBA Misuzu, who has written a book based on her child-rearing experience. Each of them tries to reproduce their own tacit skills using generative AI (ChatGPT), which is attracting growing public interest.

In addition, panel exhibits explore the question "What is human intelligence, intellect, and creativity?", which is being debated anew as generative AI spreads.

Objectives

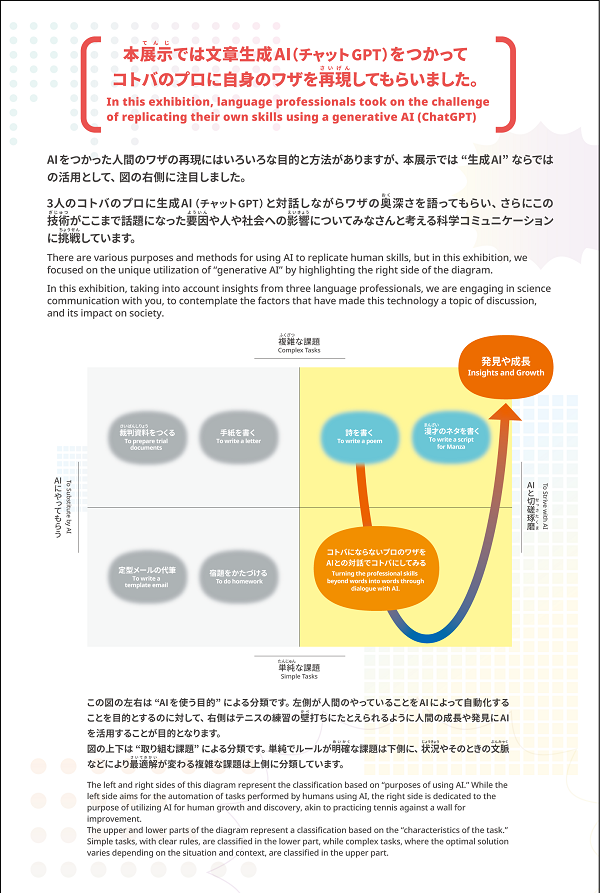

- Before entering, we strongly recommend looking at the explanatory panels on the left and right sides of the entrance gate, which present the purpose and concept of this project. The right-hand panel uses a chart to organize the purposes for which the three professionals (TANIKAWA Shuntaro, OZAWA Kazuhiro, and OBA Misuzu) used generative AI (ChatGPT).

In this project, rather than being replaced by generative AI, these professionals engaged in dialogue with it, examining the context they gave in their instructions (prompts) so that the AI would mirror their skills and techniques.

Curator's Note

When we consider how to instruct generative AI to mirror what we do every day, we realize that we are making more complex decisions than we thought. For instance, how would you teach AI whether a joke is appropriate to tell? The answer should differ depending on whether the listener is your friend or your boss.

Like the techniques of professionals, "how you make decisions in each individual situation" is hard to put into words and may remain ambiguous, not only to others but also to yourself. Using generative AI may reveal what lies behind your decisions, such as your morals and values, your sense of judgment, and your point of view. I hope this exhibition helps you discover thoughts of your own, and of others, that had gone unnoticed before, by putting into words your expectations and concerns about AI as well as your own skills.

Written by SAKUMA Koki

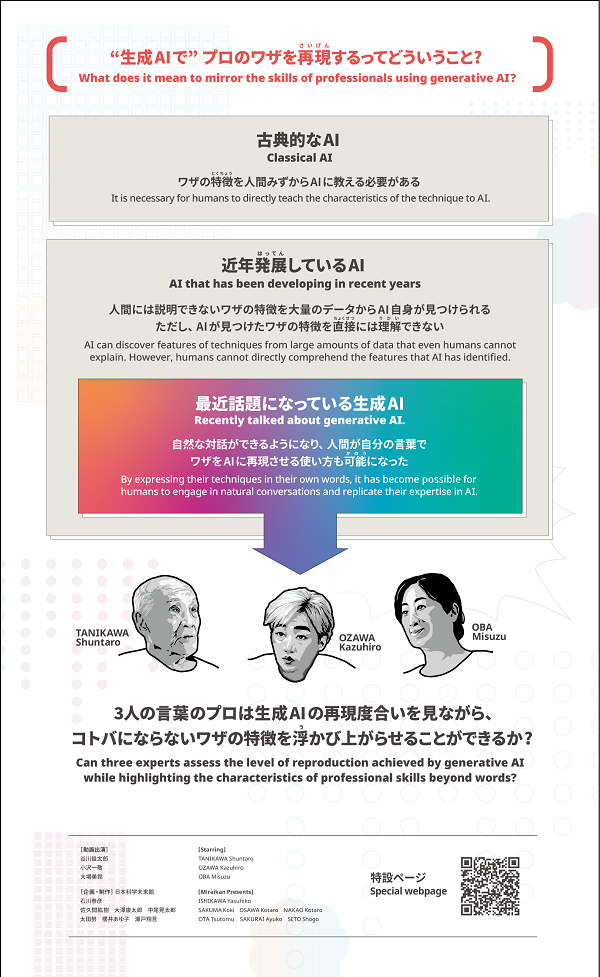

- Looking back at the history of efforts to mirror human skills with artificial intelligence (AI): in classical AI, humans had to give instructions one by one, specifying what should be done in each situation and how the AI should respond when certain conditions were met. As a result, handling complex judgments and exceptions was very difficult.

As research advanced and deep learning emerged, humans no longer needed to specify the characteristics of a skill directly. AI itself became capable of finding features in large amounts of data, including features a person may not even be aware of, which greatly improved the fidelity of skill replication.

However, humans cannot readily comprehend the features that deep learning discovers in data. Even the people who designed an AI system often struggle to explain why it works so well, despite its ability to produce convincing text or images. This is known as the "black box problem."

Recently, there has been a lot of talk about generative AI such as ChatGPT, which is a type of deep learning model known as a "large language model (LLM)." One of its significant features is that it can receive instructions in natural language. Compared with conventional AI, it understands instructions better and can respond flexibly. This makes it possible to use generative AI to mirror human skills by repeatedly giving it instructions.

Take a look at what these professionals of language think and feel when they see the AI's responses to their own instructions.

Curator's Note

There has been extensive media coverage of ChatGPT and Midjourney (a text-to-image AI service), which have become almost synonymous with generative AI. As we investigated the features and underlying technology of these systems, we came to realize that we ourselves had misconceptions and confusion about them.

In discriminative AI, which is often contrasted with generative AI, deep learning brought a rapid improvement in image recognition accuracy in 2012.

The recent breakthroughs in generative AI (though generative AI itself existed before) are also driven by deep learning. However, generative AI systems built on deep learning use a variety of mechanisms, and what they can do and what concerns they raise vary widely.

In this project, we have chosen to focus on large language models, specifically ChatGPT, which has gained widespread popularity as a conversational AI, to ensure that the discussion about the possibilities and concerns of this technology does not diverge too much.

On the flip side, technologies and applications of generative AI are expanding daily beyond what is covered here. We hope that this project can serve as a "starting point" for exploring this fascinating field.

Written by NAKAO Kotaro

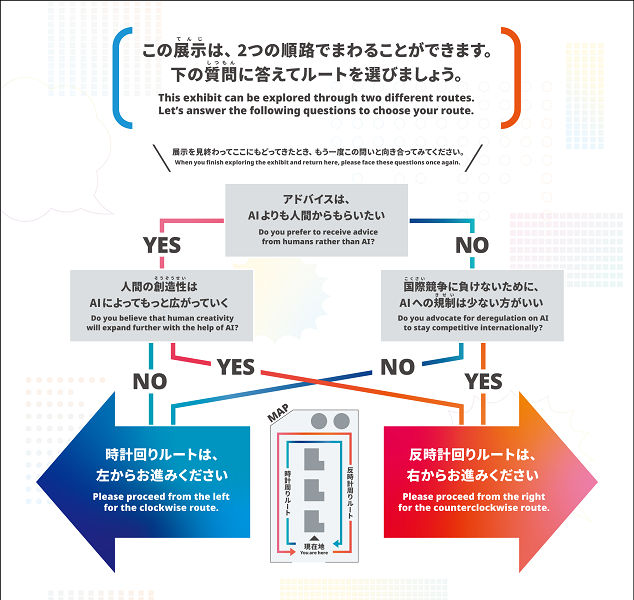

- As you pass through the gate, you will see a panel in front of the venue with a series of yes-or-no questions. Whether or not you have used ChatGPT, and whether or not you have heard of generative AI, we encourage you to answer the questions intuitively. Your answers will guide you to either a clockwise or a counterclockwise viewing route.

By talking with people who are viewing the exhibition in the opposite direction, or by facing those questions again when you come back to them, you can deepen your relationship with generative AI.

Curator's Note

The idea of a panel that sends visitors to separate entrances depending on their answers was inspired by the film "The Square" (winner of the Palme d'Or at the 2017 Cannes Film Festival). The way impressions change depending on the route taken was inspired by the "Let's turn it over" advertisement by Sogo & Seibu Co., Ltd. for New Year 2020. Finally, the idea that combining the left and right routes creates an interplay that cannot be reduced to a single line or a binary choice was inspired by UCHINUMA Shintaro's book "Work that Creates the Future of Books / A Book that Creates the Future of Work" (2009, in Japanese). It takes a bit of courage to shake up one's way of looking at things or to step away from one's own ideas, but I believe that new encounters and learning (and unlearning) await on the other side of the border.

Written by ISHIKAWA Yasuhiko

Video Exhibition

Some suggest that the work of creators such as illustrators, composers, and scriptwriters may be replaced by generative AI. The three participants in these videos considered both the concerns about how generative AI might affect their sense of purpose and their work, and the potential that could emerge from engaging with this new technology. With a mix of caution and curiosity, they interacted with generative AI (ChatGPT) and shared their own skills.

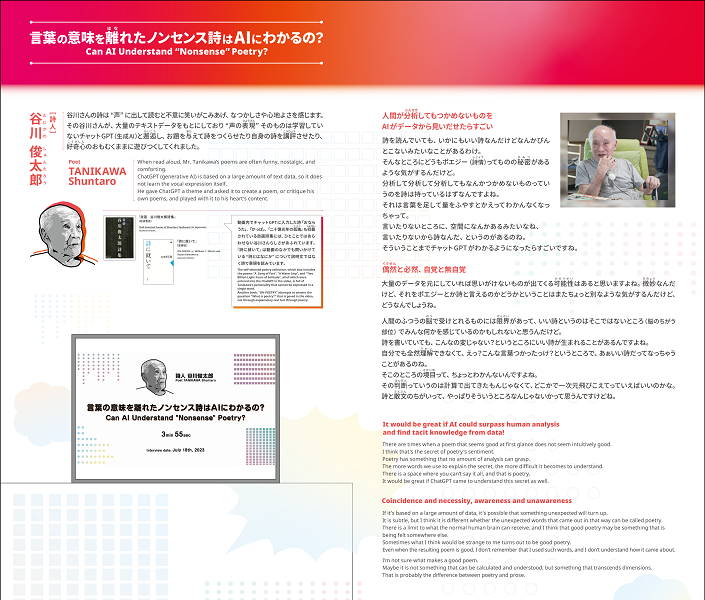

The poet TANIKAWA Shuntaro continues to take on unconventional challenges in the world of poetry. He is highly interested in science and technology: he has contributed poems to Miraikan's planetarium programs and has held dialogues with AI researchers.

When we approached him about this project, he made a proposal of his own for applying AI: "Why don't you have AI learn all of my past works?"

When we explained that we wanted him to try ChatGPT, a large language model that generates natural sentences after learning from a large amount of text data on the Web (not limited to TANIKAWA's works), he asked, "Does it learn sounds in addition to text? Does it understand onomatopoeia?" and "How does it choose between kanji (Chinese characters) and hiragana (Japanese characters)? What about English and Japanese?" He went further: "Does it understand expressions that are not based on meaning, as in nonsense poetry?" His questions were filled with a persistence that only a poet would have, and we could feel both a poet's passionate curiosity and his pride as a professional.

He also said that the exhibition, in which his poems were reworked by the exhibitors into a secondary creation, was like "part of a series of poems."

In this project, we were guided by his words and by the way he earnestly played with ChatGPT. In editing the live session into a video a few minutes long, our goal was to make it feel like "part of a series of poems" both to him and to the audience.

TANIKAWA Shuntaro has written more than 2,000 poems. Many Japanese have probably encountered his works in picture books or elementary school textbooks.

Since my image of him was largely based on such encounters, I was thrilled to find a line in his poem "Smile" that reads, "Poetry passes through textbooks at a bicycle-like speed."

As he tried ChatGPT, I heard him say, "ChatGPT, you are so serious," and "You are like an honor student." I realized that I myself had been looking for "answers" in poems, such as meanings and conclusions.

At the same time, I realized that I could not have been like that from the beginning, and that the social nature of school may keep children and teachers from enjoying the sound of poetry itself and from surrendering to feelings that cannot be put into words.

When Mr. Tanikawa asked ChatGPT to "try writing a poem as a 6-year-old child," he explained that when he writes a poem, he tries to reach the child within himself by putting himself in a child's frame of mind.

Now, I might as well get off my bicycle (putting the answer aside) and love the roadside grass with a child's mind.

Written by ISHIKAWA Yasuhiko

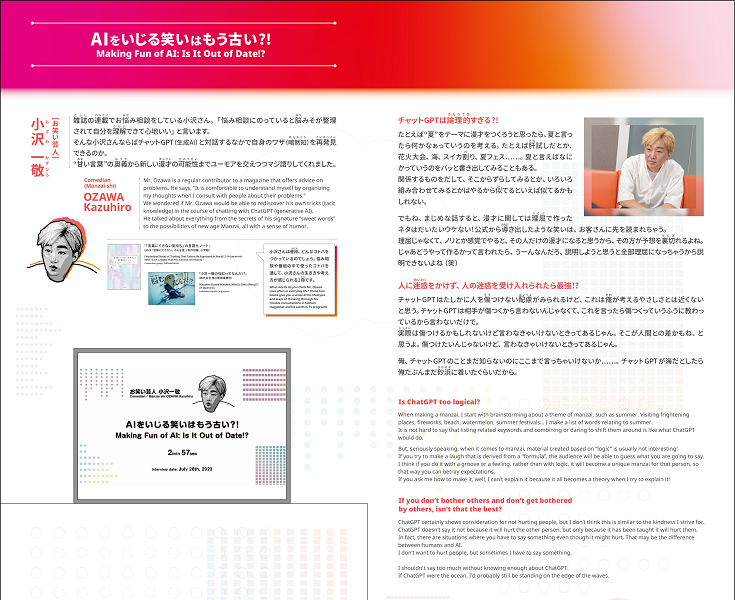

In his book, Mr. Ozawa writes, "I come to understand myself when I consult with others." We approached him because he seemed the perfect person for the purpose of this project: deepening one's understanding of one's own tacit knowledge through dialogue with ChatGPT.

As he tried to get ChatGPT to generate manzai (a Japanese style of comedy performed by a duo) and "sweet words" (romantic lines, for which he is famous), many aspects of his unique sensibility were revealed. When we told him that "ChatGPT strings words (tokens) together based on contextual probabilities," he said that he himself sometimes creates manzai in a similar way, by associating keywords with a theme.

On the other hand, he also said that "high-probability combinations of words" cannot betray the audience's expectations and so are not funny on their own. Going further, he described two ways of making manzai: "laughter that betrays the audience's imagination by shifting a pattern everyone shares" and "laughter that plays on emotion through the groove and unique sensibility of the performer, rather than the logic of shifting a pattern." He said that he aims for the latter.

On the day of filming, Miraikan staff told him that "ChatGPT is controlled by RLHF (Reinforcement Learning from Human Feedback) so that it does not produce dangerous or harmful output," to which he asked, "How can we decide what is 'good' or 'right'?" On one side is ChatGPT, which people hope will be a harmless machine; on the other is Mr. Ozawa, who believes that life becomes easier, both for individuals and for society, when we avoid making others feel bad and do not let things weigh on us (even when others do something bad to us). Are they on the same page?

Interviewing Mr. Ozawa was a truly luxurious experience that let me fully feel his kindness and curiosity. Many of the things he said did not make it into the video or the panels, but I want to share some of them with you! For example, he was indignant at ChatGPT for adding "(laugh)" after the boke (funny-man) lines of his comic dialogue, saying it was running away from comedy. But when the interviewer asked, "Would you tell ChatGPT not to add (laugh)?" he replied, "No, that's already his personality, so let's respect it."

In an interview (*1), he said, "At first we were enjoying ourselves making up material, but before we knew it, it changed to 'If we do this, it will be popular.' Then it didn't go over well." Since then, he has tried to think on the spot, without being bound by the expectations placed on him and without forcing himself into a mold. This idea of building something on the spot rather than preparing for what is expected of you is in line with "bricolage" (*2), and he brought this attitude toward work to his dialogue with ChatGPT.

Although it seemed difficult for ChatGPT to "create" manzai, by accepting its characteristics and bricolaging them as unfamiliar, different materials, Mr. Ozawa established the relationship with it shown at the end of the video. That was the moment something "became" manzai.

When I heard him say, "If I'm going to make manzai with ChatGPT from now on, I want to do it not by teasing it with 'it's a machine after all,' but by having fun trying to go beyond that," I felt, as a planner and a comedy fan, both great potential and true professionalism.

Written by OSAWA Kotaro

*1 Job Hunting Journal (2013): "Ozawa Kazuhiro (Speed Wagon, comedian) on 'What is work?'"

https://journal.rikunabi.com/p/career/7916.html (Japanese only.)

*2 From French, meaning something like "to gather together and make your own." The term was used by the anthropologist Claude Lévi-Strauss in his book The Savage Mind. In modern societies, "engineering" based on planning and harmony tends to be emphasized, but when considering professional skills that cannot be put into words, I felt that bricolage, full of improvisation and "one-time-ness," is also important, not engineering alone.

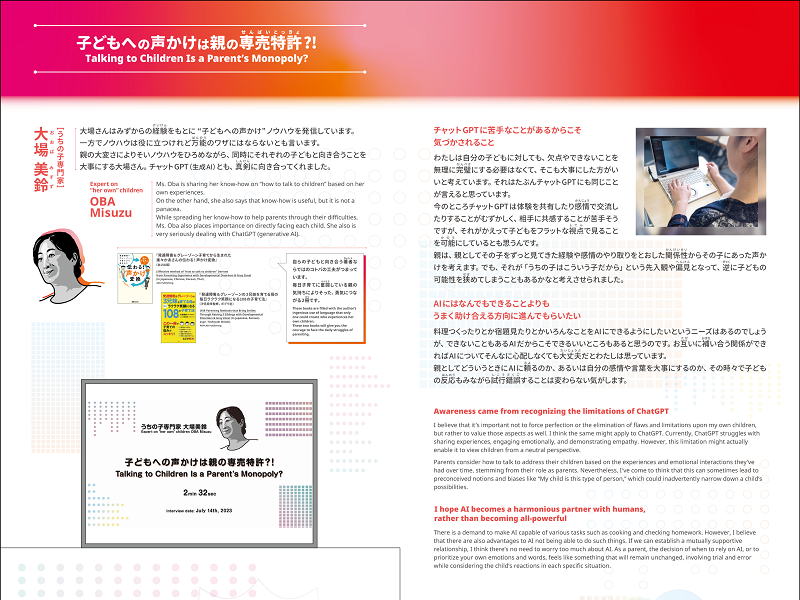

As an expert on "her own child," Ms. Oba actively uses new technologies and shares her know-how on how to talk to children. At the same time, she knows that dealing with children takes more than know-how, and she values the difficulty and depth of engaging with children face to face. We therefore asked her how to interact appropriately with ChatGPT, and about the significance and limits of using ChatGPT to verbalize her implicit skills.

During the interview, her attitude toward ChatGPT came through clearly: she called it "ChatGPT-san" (roughly, "dear ChatGPT") and engaged with it as if she were talking with a person.

As she interacted with ChatGPT, she noticed its weaknesses but never dismissed it for them. Instead, she accepted these "weak points" as part of its character and reframed them as strengths. She also talked about the potential for ChatGPT and humans to help each other in child-rearing. In the video, you can see her choose her words carefully, with pauses, in a way that reflects her deep insight into children. This shows that she was treating ChatGPT as an equal partner.

*To achieve a "human-like understanding of the meaning of words," at which AI still performs poorly, some studies attempt to teach AI pain and emotion through "experience," or to give AI "a body" using robotics technology.

I was struck by Ms. Oba's insight: parents have ready answers about how to talk to their children based on their own past experience, but these may become preconceptions that narrow their children's possibilities. I also have childcare experience, and through my involvement in child-rearing I have developed a few winning patterns of my own, such as "how to get them to daycare quickly without detours," "how to get them to take a bath," or "how to get them to stop watching TV or playing video games." These are certainly useful, but I am now reconsidering whether they might actually get in the way of engaging better with children. I want to cherish children as they are, along with the time I spend with them.

Written by SETO Shogo

Panel Exhibition

Generative AI based on large language models, such as ChatGPT, has made it possible to hold natural conversations with humans, a task long considered difficult. Some people who have used or worked on this technology feel that it possesses a form of intelligence, whether similar to or fundamentally different from human intelligence. How will generative AI, which doesn't fit neatly into the category of machines used by humans, affect human creativity and ethics?

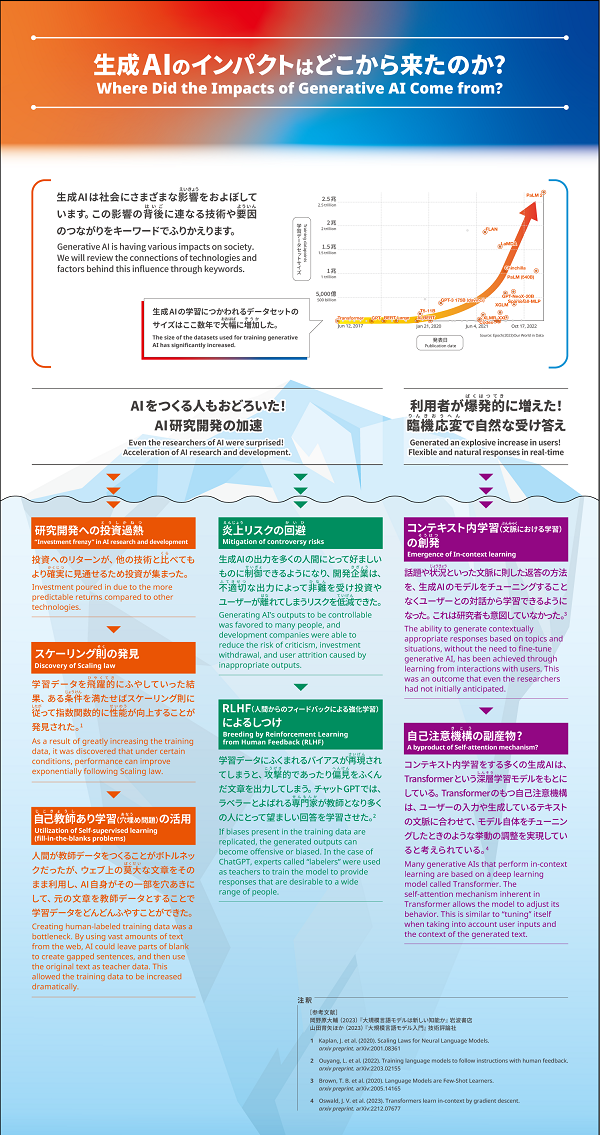

- In November 2022, ChatGPT, a generative AI, was introduced and rapidly gained popularity among the general public. Even the developers themselves did not anticipate that this AI service would have such a significant impact. (*1)

In this panel, the impact of generative AI on both the general public and its developers is represented metaphorically as the tip of an iceberg visible above the sea. The panel explains the factors beneath the surface that contributed to this impact. Reflecting on these factors may offer valuable insights into the impacts generative AI could bring in the future.

This panel is also positioned midway along both the counterclockwise route (for visitors inclined toward active use of generative AI) and the clockwise route (for those inclined toward cautious use). We believe viewers will perceive its content differently depending on their attitudes toward AI. Viewers with a positive impression, seeing how obstacles were overcome one by one, may come to expect even more from AI's future development. Those with concerns and anxieties, on the other hand, may see the accidental discoveries as evidence that our understanding and control of AI have not kept pace with technological advancement.

*1 "The inside story of how ChatGPT was built from the people who made it"

Curator's Note

Those who have used ChatGPT may have been amazed by its fluent and natural responses, as if a human were writing in real time. Investigating how this AI came into existence, I realized that many technologies, innovations, and events had accumulated to make it possible. What fascinated me was not just the systematic, planned progress of technology, but also unplanned surprises: the emergence of unexpected abilities and the discovery of new laws.

The panel is organized into three vertical storylines. To be honest, I wanted to show that they are interconnected horizontally as well, but I kept this layout for the sake of clarity. I believe it is important to consider not only the flow of technological advancement but also various influences and backgrounds when thinking about the complex relationship between technology, humanity, and society.

Written by NAKAO Kotaro

Predicting the future of technology and its impact on society, for generative AI and beyond, is a tough challenge. The difficulty persists even when we look back at historical developments: causes and effects may appear straightforward and predictable in hindsight, but this partly reflects hindsight bias, in which explanations are sought only after the outcomes are already known.

Nonetheless, by concentrating on the factors mentioned on the panel, you may be able to gain a deeper understanding of the characteristics of generative AI and potentially acquire a variety of perspectives on what the future may hold. Whether one perceives ChatGPT as a search service that retrieves information from existing databases and websites, or as a tool that generates new (sometimes inaccurate) information based on patterns inherent in existing data, significantly influences both the expectations and concerns associated with this technology.

Written by ISHIKAWA Yasuhiko

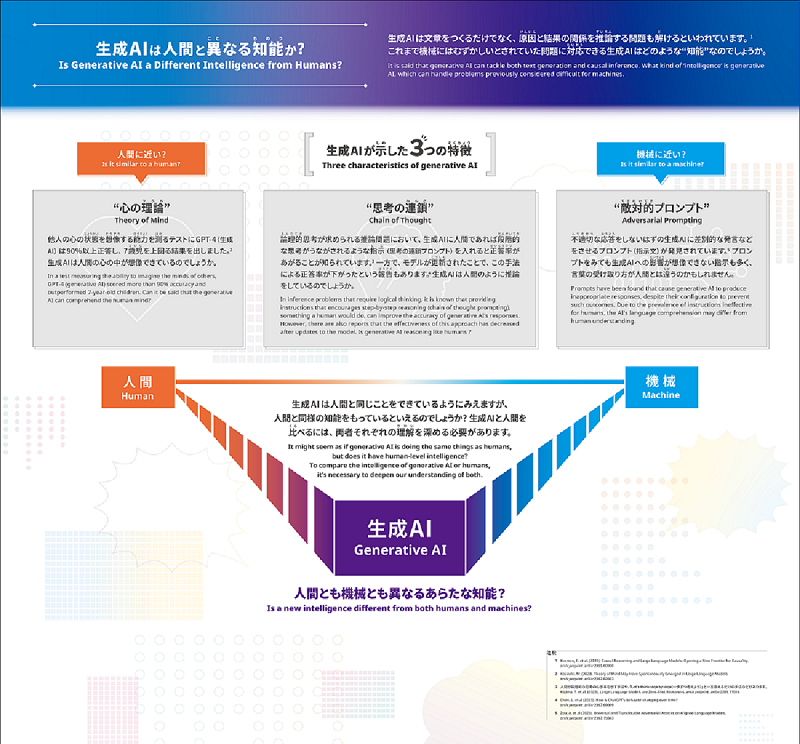

- We tend to anthropomorphize objects, machines, and even moving figures, attributing personalities and states of mind to them. It is no wonder, then, that when we observe ChatGPT's natural and flexible responses, we may perceive it as having human-like intelligence. However, natural responses are possible without intelligence, as long as the rules (patterns) that successfully reproduce human responses are known. Does ChatGPT have human-like intelligence, or does it only appear to?

Research on the "intelligence" of generative AI built on large language models, such as ChatGPT, which flexibly handles diverse tasks, includes investigations into the AI's internal mechanisms as well as studies that give the AI various tests and examine its results (responses). These tests include not only ones designed for AI but also ones intended to measure human intelligence.

This panel presents three examples of AI "intelligence": one that makes you think "maybe it's the same as humans," one that makes you think "maybe it's different from humans," and one that makes you feel both. With these examples, you can consider whether human and AI intelligence can be compared, and if so, what methods would be appropriate. If both can accomplish the same thing in the end, can we say they have the same intelligence, even if their mechanisms and processes differ?

How can we know how they work in the first place? Do you think intelligence is inherent in the individual (a person or an AI system alone) and can be demonstrated at any time? Or do you think what one can do depends on the environment at the time or the people around?

Depending on how you consider the "intelligence" of AI and humans, your relationship with AI may change in terms of what you entrust to AI and what kind of words (prompts) you give to it.

Curator's Note

Many tests have been conducted to explore the characteristics of large language models by giving them various prompts and comparing their outputs. When such tests show that AI "can think logically" or "understands the human mind," can we really say that AI has the same mechanisms as humans? Or is it just a result that happened to work? Or do we simply tend to think it works the way we do, even though it works in a completely different way? We need to pay attention to the human mechanisms for interpreting AI behavior, including what such tests are actually measuring.

Through conversations I had in the exhibition area, I sometimes felt that how one perceives generative AI may reflect one's thought patterns and one's common sense.

As the concepts of "schema" and "frame" in cognitive psychology suggest, we are not usually aware of these patterns in our own thinking. When I asked a visitor who felt that we should not impose functional limits on generative AI to explain why, we realized together that the reason was not his initial explanation of "convenience and efficiency," but an underlying belief that we should not limit human potential. He himself had not been clear about the reason behind his view; we discovered it by talking with genuine interest in our differences.

By conversing with people who have different "patterns" from yourself, you can become aware of your own "norm" that you had not noticed before because it was too obvious. I would be happy if you could have many dialogues with various people through this project.

Written by SAKUMA Koki

One visitor asked, "Does this AI reproduce the thinking of neurotypical people?" They also asked how one might create an AI that matches various developmental characteristics. I explained that current large language models are trained on a huge amount of text data from the Web and probabilistically reproduce patterns in that data, so they are thought to reflect overall tendencies rather than individual cases.

At the same time, I was moved, because I felt I was being asked what it means to be human, the very premise of this exhibition panel.

I felt I could end up lost in a strange cul-de-sac unless I always kept in mind that thinking through AI about what it means to be human is not about creating a uniform definition or model of human nature.

Written by ISHIKAWA Yasuhiko

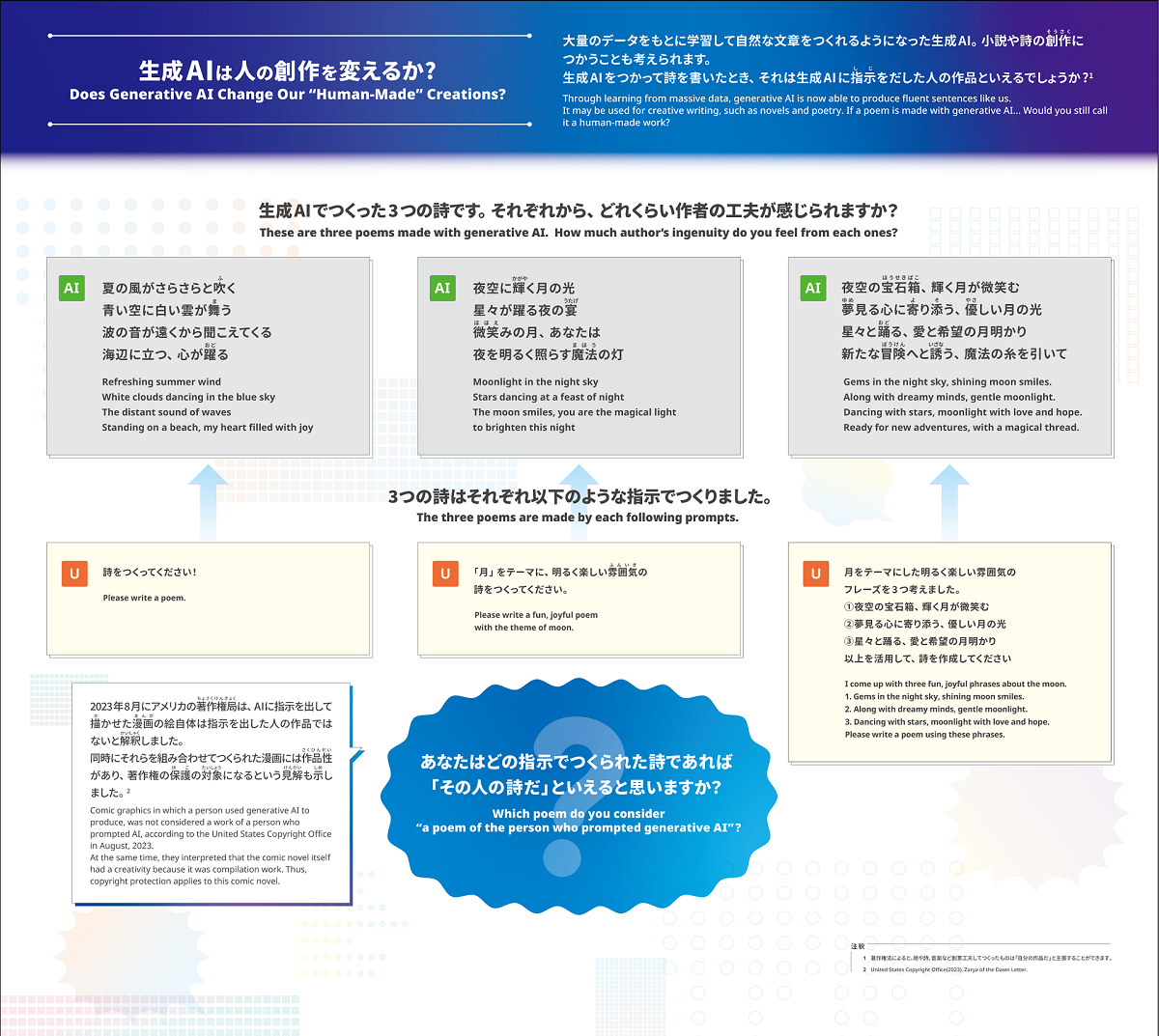

- The boundary between human creation and mechanical generation is being blurred by the rise of generative AI, capable of producing text that resembles poetry, even with basic prompts like, "Please craft a poem."

This panel raises the question of how specific and detailed a prompt must be for a work produced by generative AI to be considered a "human creation."

In guidance issued on March 16, 2023 (*1), the U.S. Copyright Office indicated that it will not accept works created by AI with human prompting as human creations.

In a public letter (*2) explaining why it refused the copyright application of an individual who created a work using Midjourney, an image-generating AI, the Office stated that the AI "interpreted" the prompts according to different rules than a human would, and that the prompts merely influenced the AI rather than directly instructing it. On this basis, a distinction is drawn between works created with conventional tools, such as paint or image-editing software, and those created with generative AI.

Until now, giving indirect instructions to staff members while relying on their discretion, or developing creative ideas through the interpretations of people from different cultures (who do not share a common understanding), would not have undermined an individual's creativity (or copyright). If we view generative AI as akin to people from other cultures or as an autonomous entity, interpretations different from the one outlined above become possible.

In this manner, conventional values and common sense are re-evaluated in the process of integrating the new technology of generative AI into society, bringing about renewed awareness regarding the nature of creative endeavors and copyright management.

*1 Copyright Registration Guidance: Works Containing Material Generated by Artificial Intelligence

*2 Re: Second Request for Reconsideration for Refusal to Register Théâtre D’opéra Spatial

Curator's Note

A visitor who had just started writing poetry watched the video in which the poet TANIKAWA Shuntaro commented on AI-generated "poems," saying, "It seems like it's just writing pretty words." Upon seeing this, he confessed, "Perhaps because I've just started writing poetry, I've been caught up in superficial techniques lately. Now I actually envy the AI's ability to produce such straightforward expressions."

On another occasion, someone involved in creative work asked, "Aren't you assuming that creators are satisfied as long as the work gets done? There must be quite a few people, both creators and audiences, who enjoy the process of creating." Perhaps to him, this panel seemed to be asking how many corners a creator can cut before the work no longer deserves copyright protection.

At that moment, I realized that while producing the panel, I had been focusing on how much human intent was reflected in the finished work. However, our engagement with creation should not be narrowly defined by the finished "product" alone. Through conversations with these visitors, I was able to shift my focus toward the process of creation and the accumulation of experience that leads to mastery.

On the other hand, processes that do not show up directly in the outcome may become invisible under market principles. What kinds of "processes" will be considered valuable?

Written by OSAWA Kotaro

A visitor from overseas mentioned feeling uneasy about the very question posed by this panel. On further conversation, it became clear that she had an affinity for contemporary art and did not believe that reflecting more of the author's intent in a work necessarily makes it more creative.

For example, in Marcel Duchamp's "Fountain," it is difficult to find the author's intent or creativity in the construction of the ready-made urinal itself. However, the act of asking viewers, "Could this be considered a work of art?" is itself creative.

With these auxiliary lines drawn, I realized that the panel's premise, that the creativity of AI-generated content depends on how much it reflects the author's intent, might actually narrow the concept of creativity.

Yet, discussions on drawing socially acceptable boundaries regarding copyright issues are likely to continue.

Written by ISHIKAWA Yasuhiko

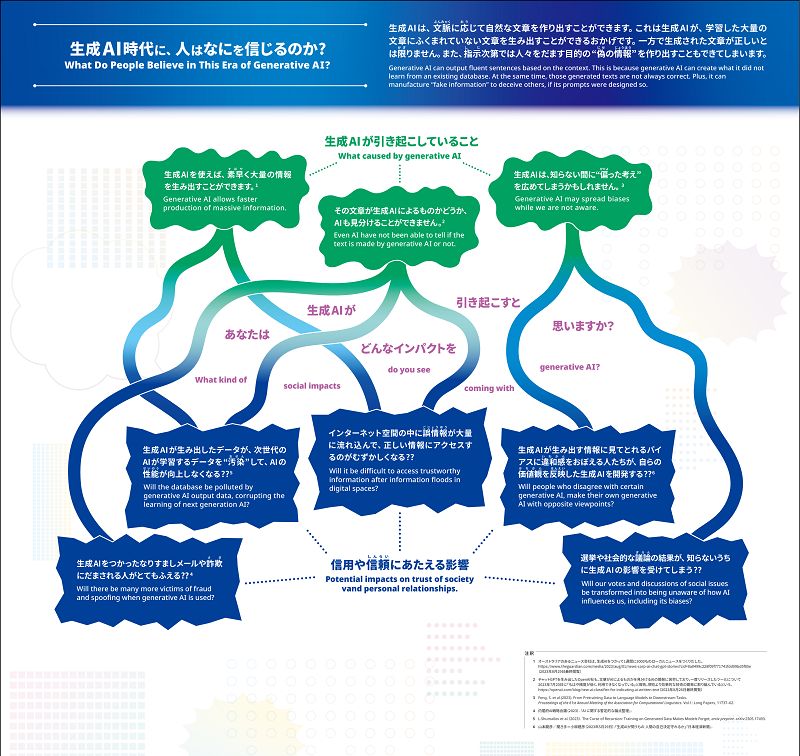

- ChatGPT, which can generate natural-sounding sentences, is a generative AI based on a large language model (LLM). What is remarkable about an LLM is its ability to craft natural, coherent sentences without knowledge explicitly supplied by humans. An LLM is trained, through a process known as machine learning, on vast amounts of text data from the Web. However, rather than merely copying this data, an LLM can "generate" new sentences by combining words in an enormous number of ways. The name "generative AI" comes from this feature.

Together, these two features, the ability to generate natural-sounding sentences and the ability to produce content that is not merely copied from the training data, make it difficult for many people to recognize misinformation when it is generated.

Let's look at this panel from the perspective of someone trying to create fake information. The callout at the top of the panel lists three characteristics of generative AI: ① it can generate a large amount of information quickly; ② its output cannot be identified as AI-generated text; ③ biases contained in the generated text spread.

The strategy of "throw enough mud at the wall and some of it will stick" becomes easy to carry out with generative AI, which can quickly produce large amounts of information. And if AI-generated text is indistinguishable from human-written text, it can do more than "throw enough mud": it can spread the deception more widely. As for "bias," some may expect AI to generate objective content because it is based on data, but if the data itself contains bias, the AI's output may inherit it.

The bottom half of the panel points to significant effects beyond what any individual might assume, regardless of whether anyone intends harm. For instance, the work of verifying the accuracy and sources of information, known as fact-checking, could become enormous. This could make trustworthy information even harder to obtain than it is now. There is also the possibility of deepening conflicts and divisions between differing ideologies: people with conflicting viewpoints may point out each other's biases and develop generative AI aligned with their own positions, contributing to further polarization.

Curator's Note

The venue for this project was divided into two routes, one for people who view generative AI positively and one for those who view it critically. The exhibition was designed to make visitors notice people with different viewpoints as they passed each other. Our views of generative AI are likely to shift as we move between personal viewpoints and social perspectives such as economics, rules, and systems.

How can we arrive at "solutions" in a world we share with others? An optimal solution for the individual does not necessarily translate into an optimal solution for society as a whole, and vice versa. When setting rules for generative AI, it is crucial neither to leave the matter solely to experts nor to rely on simple, easy conclusions by majority. We believe that our role as science communicators is to foster an ongoing dialogue that bridges the gaps among "individuals," "those different from oneself," and "society."

Written by OSAWA Kotaro

Post-Exhibition Note

- Mirai can NOW 5th “Professional skills beyond words: Can Generative AI mirror it?” (NOW5) focuses on human skills that cannot be verbalized, commonly known as tacit knowledge. ChatGPT, which debuted at the end of November 2022 and spread rapidly, surprised many people by flexibly adapting its responses to users’ instructions. The project began with the thought that it would be interesting to use ChatGPT as a conversational partner to prompt the verbalization of one’s own tacit knowledge.

Comparing traditional deep learning with the generative AI used here to mirror tacit knowledge, we were reminded that with generative AI, how faithfully a skill is reproduced depends not only on the training data and the AI model but also, to a significant degree, on the user's prompts. Text-generation AI, represented by ChatGPT, has markedly improved its understanding of instructions given in everyday language and can adapt its behavior on the spot according to those instructions, a capability known as "in-context learning." We believed this feature might open new possibilities, even for the reproduction of tacit knowledge by AI.

Conversing with generative AI brought a sense that something was off about how tacit knowledge was articulated and reproduced by the AI, and that very sense led to new discoveries and insights into one's own skills that cannot be verbalized.

In this project, by showcasing how three professionals used generative AI, we encouraged visitors to see generative AI as a mirror reflecting their own skills and underlying values. We believed that engaging with other people's perspectives and opinions through dialogue could foster deeper conversations.

To facilitate dialogue, visitors entering the exhibition space were presented with several questions (posted on this special webpage, "Which order would you choose, clockwise or counterclockwise?") and were divided into two viewing routes. Their responses were used to classify their impressions of generative AI as positive or critical, and each route was designed to move gradually from perspectives close to the visitor's current thinking to more distant viewpoints. This design also aimed to make visitors aware of others who were viewing the exhibition in the opposite order.

As Science Communicators, we engaged in conversations with visitors from around the world throughout the venue, and these interactions gave us useful insights into visitors' values. For example, one visitor chose what we called the critical route and began viewing the panel exhibits. The first panel addressed the impact of generative AI on trust, since it sometimes produces “misinformation.” The visitor calmly commented, "I'm concerned about misinformation. My child isn't going to school, so I'm considering using AI to create problems and teach, but it would be troublesome if incorrect questions or answers were generated."

Later, when I spoke with her again as she reached the video exhibits in the latter part of the route, she seemed surprised as she told me, "Actually, today I asked AI, 'I feel lonely, what should I do?' and it told me to do my nails, so I did." She then showed me her beautifully manicured nails.

If the exhibition had discussed only the importance of accurate information and technical innovation, we would never have learned that this visitor was using generative AI in this way. I then mentioned that "some people suggest using AI as a conversational partner for children" and asked, "What do you think about using AI as a conversation partner for your child, the way you are doing yourself (regardless of the accuracy of the information)?" After some thought, she replied, "Well, I might think it's okay for myself, but for children... it might feel scary, as if they were being manipulated."

This dialogue highlighted how one's thinking can gradually change when one is inspired by perspectives different from one's own.

During the planning stage, we designed the questions while imagining situations in which visitors would voice diverse opinions. When we actually engaged in dialogue at the venue, we found the perspectives to be even more diverse than expected. Similarly, with new technologies, issues that could not be anticipated during research and development become apparent only once the technologies are actually used.

In healthcare, for disabilities and hardships that cannot be addressed within the framework of diagnosis and treatment, care aimed at relief and adaptation becomes important. In such settings, the “Cynefin Framework” is sometimes used to organize issues in interprofessional work (IPW). Issues are categorized as Simple, Complicated, or Complex, and emphasis is placed on using a different approach for each. We believe this could offer a hint for applying science and technology to societal challenges.

When digital transformation (DX) and AI are put to use, the aim is often automation by machines. In such cases, it is necessary either to pick out the Simple tasks or to simplify the Complicated ones. Complex tasks, by contrast, intricately involve many people with different perspectives. Because of this complexity, they cannot be controlled as planned, and no one knows "the correct answer." This project focused on the potential of using generative AI for Complex tasks. Some visitors suggested that tasks unsuitable for automation may not be suited to generative AI in the first place.

If the purpose is to perform the same task efficiently, generative AI may indeed be unsuitable. However, given ChatGPT’s ability to flexibly grasp context from prompts, we believed it could be used to verbalize one's own "skills" and values, the tacit knowledge inherent in Complex tasks.

Dialogue with others whose skills and values one could not have imagined from one's own perspective shakes up the values and assumptions one has taken for granted. The aim of this project was to use that shake-up to update how we frame issues.

In Complex tasks, various perspectives interact in intricate ways, making it difficult to foresee "the correct answer." To address this difficulty, it is necessary to engage in dialogue with others who hold various positions and viewpoints, and to spend time trying out different approaches. One must resist the temptation to seek a straightforward "correct answer" and instead tolerate ambiguity. Rather than organizing tasks according to a single set of values, is it possible to create a space where people influence one another and change their thinking as they interact? This was the biggest challenge of the project.

After this exhibition concluded on November 13th, while the planning team was still basking in its afterglow, shocking news emerged on November 17th (Pacific Time) that the CEO of OpenAI, the company that developed ChatGPT, had been dismissed.

Then, on November 21st (Pacific Time), the dismissed CEO made a dramatic return. This series of events appears to have stemmed from a clash between advocates of accelerating productivity and economic growth and cautious voices concerned about unforeseen impacts as the pace of technological advancement outstrips the ability of society and individuals to adapt.

Through conversations with visitors, we came to recognize the varying impacts of generative AI and the diverse perspectives people bring to evaluating it. As we brought together seemingly conflicting voices from the many visitors who came each day and engaged in deeper dialogue, we increasingly encountered the complexity and diversity of situations, values, cultures, and historical contexts. What emerged was not simply a binary conflict between proponents and skeptics but a question of how to govern new changes adaptively within complex individuals and societies.

Renowned poet TANIKAWA, who collaborated on this project, commented on the AI-generated poems: "They've written something fine-sounding," "A bit too serious," and "There seems to be a kind of logic hidden, or maybe dwelling, within." Enjoying and intrigued by the AI's responses, which differed from his own concept of poetry, he engaged in a dialogue that stimulated him. Watching the video exhibit capturing this interaction, we, along with the visitors, were afforded the luxury of contemplating the question "What is poetry?"

This project endeavored to use generative AI as a mirror reflecting one's values, preferences, and unconscious assumptions, so that it could serve as a tool for dialogue with oneself and others. On the other hand, there is also a dystopian scenario in which generative AI, by producing vast amounts of text, images, audio, graphs, and formulas indistinguishable from human-created ones, drives up the cost of establishing the "trust" that human society depends on, potentially disrupting dialogue and communication.

We would be delighted if this project offered a space where the complex relationships among science, technology, people, and society could be observed from a broader perspective. We hope it leads to dialogue with others with whom we may not easily agree, and that discussions that are not black and white can uncover possibilities, significance, and solutions.